When AI met Strategy

AI and strategy are casually flung together into far too many sentences these days. Usually during meetings where a lot is spoken, but little is said. Here I make connections between the two and see where that takes us.

I am using AI as a placeholder for all sorts of analytics, from the most basic to the most advanced: intelligence, defined broadly, that resides outside brains. Slightly inaccurate in some contexts, but a lot more efficient (not to mention, trendy).

As to what strategy is, not too many people agree on it. Some define it as the ‘how’ you will do things. One of my professors used to call it a ‘sustainable competitive advantage’. (Buffett and Munger would just call that a moat.) Musashi was talking about sword fighting when he talked about it. Sun Tzu was talking about war in general. Avinash Dixit and Barry Nalebuff wrote a book about game theory and called it The Art of Strategy, so one can guess what their opinion is. So let’s just skip a rigorous definition and content ourselves with a sense of strategy as some set of ideas which are more abstract than tactics, and more concrete than a vision, with the precise meaning being contextually agreed upon. After all, the Tao that can be named is not the Tao.

However, one thing stands out as common — it is always about doing the right things while in conflict with others. And if there’s one field which has grappled with conflict the most it’s the military. Military models are tested in the unforgiving laboratory of armed conflict and have to be replaced if they aren’t helping. No wonder then that a lot of strategic thought came out of military strategy.

Some might take issue with the comparisons of business to war. I agree — you don’t usually see people in suits chasing others with axes in hand. But fields need not be exactly similar to transplant ideas. Besides, one must admit that it is, regardless of intensity, still a competition, with the violence at the abstract level of organizations.

“What is strategy? A mental tapestry of changing intentions for harmonizing and focusing our efforts as a basis for realizing some aim or purpose in an unfolding and often unforeseen world of many bewildering events and many contending interests.” — John Boyd

Which brings us to John Boyd, who is widely considered to be one of the greatest military strategists. Most people haven’t heard of him. Probably because he didn’t write a lot of articles or books, preferring to give briefings instead. In the only paper he did write, Destruction and Creation, he brought together ideas from thermodynamics, Heisenberg, and Gödel in the short span of eleven pages, to make the point that we should be constantly breaking and making mental models. In his briefing Patterns of Conflict, he delivered the principles he figured out by studying conflict throughout history. This became the basis for US military strategy, especially for the Marine Corps.

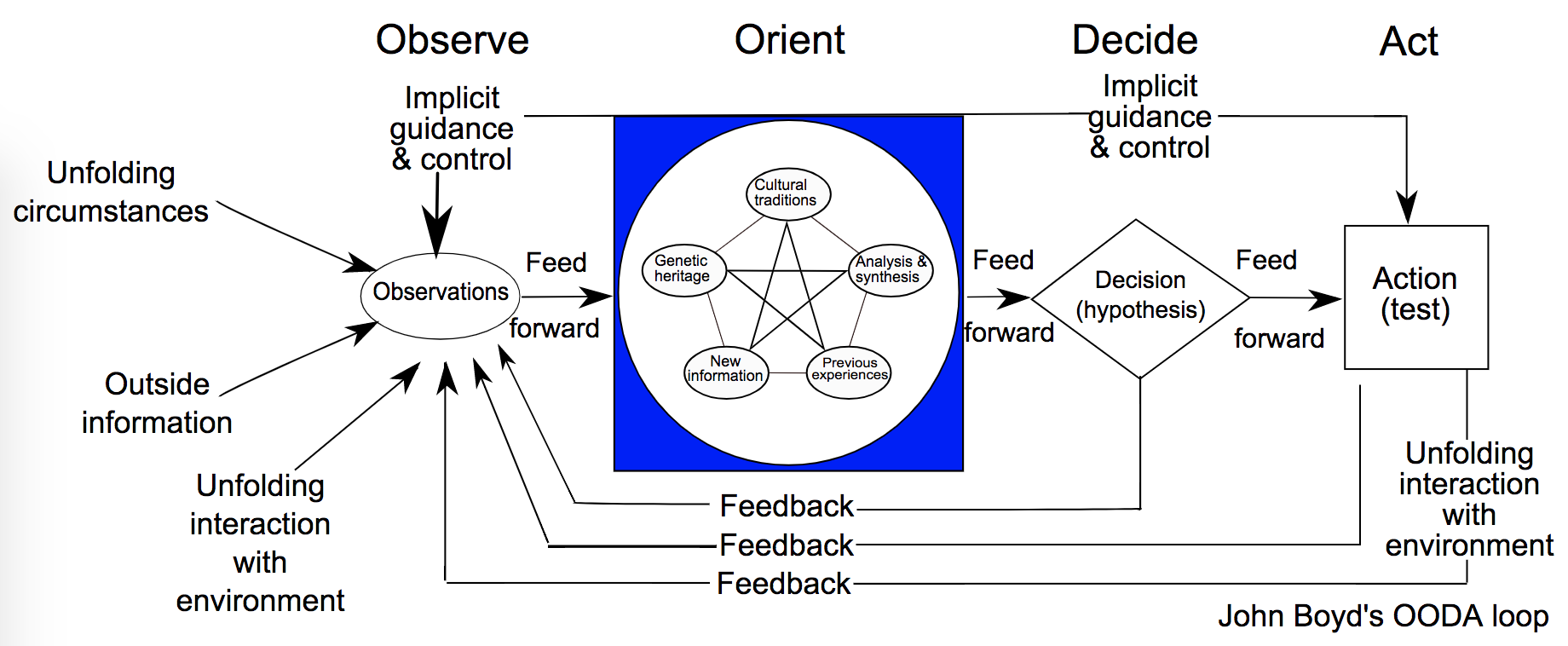

And at the core of all that is a deceptively simple decision-making framework called the OODA (Observe-Orient-Decide-Act) loop. A naïve representation of this might be four boxes (appropriately rounded and tastefully colored, of course), arranged in a circle with arrows going from one to the other. But feast your eyes upon the elaborate mandala that the real one was conceived as.

“Note how orientation shapes observation, shapes decision, shapes action, and in turn is shaped by the feedback and other phenomena coming into our sensing or observing window. Also note how the entire “loop” (not just orientation) is an ongoing many-sided implicit cross-referencing process of projection, empathy, correlation, and rejection.” — John Boyd

At the risk of being simplistic, the basic idea is that whoever can go through the loops best wins. The Boydians have terms like fast transients and getting inside the enemy’s loop and tempo for this. Tempo is different from just speed. It’s how fast you can switch maneuvers, not just complete them. You might actually have lower tempo when going at a higher speed, making you more predictable and susceptible to attack. A classic example is the F-86 vs. the MiG-15 in the Korean War. This was sort of the apple-falling-on-the-head moment for Boyd. The F-86s shot down ten times as many of the technically superior, faster MiG-15s. The brass attributed it to better training, but much later Boyd credited the F-86’s bubble canopy and the hydraulic systems: better observation/orientation, and faster maneuver transitions.

“The ability to operate at a faster tempo or rhythm than an adversary enables one to fold the adversary back inside himself so that he can neither appreciate nor keep up with what is going on. He will become disoriented and confused…” — John Boyd

Anyway, let’s not dwell on the loop much further. For now, just know that, despite its apparent obviousness, the implications of the loop are very instructive in the conduct of conflict, and give rise to the ideas at the core of successful movements like maneuver warfare, Agile, and the Toyota Production System.

Now, the reason why all this is pertinent is that the point of all your AI, contrary to what many believe, is not just to impress your date or secure VC funding; it is to make better, faster, cheaper decisions. And we just saw how one of the deepest strategy frameworks is at its heart a decision-making framework.

The point of AI is to make better, faster, cheaper decisions.

This is why the use of AI can be strategic — because it enables you to go through your loops better by helping you improve at least one of the four stages of the loop.

(By the way, Russell and Norvig listed the following as the elements of AI: perceive, understand, predict, manipulate. Sounds familiar?)

Realizing this connection has benefits. We have a direct sense of how AI can be strategic. We can now think more clearly about the value that is being added with the analytics. Looking at the four stages can help in thinking about solutions. And more generally, thinking in terms of decisions to be made, opens up a wide variety of decision making tools for us to use, not just Deep Learning (TM). You are freed to turn to operations research, graph theory, behavioral economics, psychology or whatever else fancies you, all in pursuit of better, faster, cheaper decisions.

Having a quiver full of bespoke arrows is way better than a blunt hammer to use on everything, but how can we choose what to deploy when?

Can types of AI be matched against the stages?

The first thing that comes to mind might be to say that you would use descriptive analytics to observe/orient, predictive analytics to orient, prescriptive analytics to decide, and automation to act.

Is this always true, though? Suppose deep learning was used to process satellite imagery and the output was shown to a human who decided whether to bomb an area. Or suppose an ML model generated a revenue forecast which was fed into a dashboard for the decision maker. In both cases the fancier techniques were inputs to plain old BI! (Of course, this also depends on your frame of reference. If you see your scope as the delivery of the revenue forecast, coming up with that number is the extent of your decision. Your OODA is dependent on how zoomed in or out you are.)

This is where those pyramids you keep seeing all the time are misleading. You know the ones I’m talking about: they all go descriptive-predictive-prescriptive or BI-ML-AI, and it’s neither clear why these triangles must be called pyramids, nor why the higher stages must be smaller than the lower. Let me not waste article space by showing them, but if I did have the zeal to search for usable images, I’d plaster them with big, fat, probably red crosses or question marks before pasting them here.

Because that need not be the order of creation. That need not be the order of value. That need not be the order of distance from decision. At best it’s the order of analytical sophistication, which might make it mildly useful as a learning plan. At worst it is the cause of money down the drain. Indeed, in line with Moravec’s paradox, O-O might use more sophisticated methods than D-A.

Descriptive analytics might drive high-value decisions just by surfacing the relevant information in an easily consumable way. You can do prescriptive analytics before predictive analytics. Like Morpheus said, free your mind.

In fact prescriptive analytics might just be the most misunderstood (hint: it’s not coming up with recommendations using the output of predictive analytics) and underused form of AI.

Pet peeve: people coming up with recommendations using the output of predictive analytics and calling it prescriptive analytics. Yes, you prescribed, but no new analytics was done. By that logic, all recommendations are prescriptive analytics and PPT is the best tool to do it. https://t.co/RtGZp8MkET

— Rithwik (@rithwik) May 16, 2019

Anyone who tells you to do deep learning before using some good old linear programming to prescribe you some optimizations should be promptly escorted off the premises before more damage is done.
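To make "good old linear programming" a little more concrete, here is a minimal sketch of a two-variable product-mix problem. Everything in it is an assumption for illustration: the numbers are made up in textbook style, and `solve_lp_2d` is a hypothetical helper that simply checks the corner points of a bounded feasible region (where a linear objective attains its optimum). Real work would use a proper solver, but the point stands that no prediction is needed to get a prescription.

```python
from itertools import combinations


def solve_lp_2d(objective, constraints, eps=1e-9):
    """Maximize objective[0]*x + objective[1]*y subject to a*x + b*y <= c.

    Illustrative only: assumes two variables and a bounded feasible region,
    so the optimum sits at an intersection of two constraint boundaries.
    Returns (value, x, y), or None if no feasible corner is found.
    """
    best = None
    for (a1, b1, c1), (a2, b2, c2) in combinations(constraints, 2):
        det = a1 * b2 - a2 * b1
        if abs(det) < eps:
            continue  # parallel boundaries do not meet at a single point
        # Cramer's rule for the intersection of the two boundary lines.
        x = (c1 * b2 - c2 * b1) / det
        y = (a1 * c2 - a2 * c1) / det
        # Keep the point only if it satisfies every constraint.
        if all(a * x + b * y <= c + eps for a, b, c in constraints):
            value = objective[0] * x + objective[1] * y
            if best is None or value > best[0]:
                best = (value, x, y)
    return best


# Hypothetical product mix: profit of 3 per unit of x and 5 per unit of y.
profit = (3, 5)
limits = [
    (1, 0, 4),    # plant 1 hours: x <= 4
    (0, 2, 12),   # plant 2 hours: 2y <= 12
    (3, 2, 18),   # plant 3 hours: 3x + 2y <= 18
    (-1, 0, 0),   # x >= 0
    (0, -1, 0),   # y >= 0
]

best_value, best_x, best_y = solve_lp_2d(profit, limits)
print(f"make x={best_x:.1f}, y={best_y:.1f} for profit {best_value:.1f}")
```

The output is a direct recommendation (how much of each product to make) derived from constraints and an objective alone, which is what separates prescriptive analytics from a slide of suggestions.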

So, apart from the side effect of refuting some dogma, our attempt to match tools to decision phases seems not to have been very useful.

Can types of AI be matched against the decision contexts?

There are lots of decisions being made right now in your organization, and many of those have sub-decisions too. The OODA loop is fractal. Various stages of tactical and strategic loops are running at various levels simultaneously. Maybe looking at the context where the loop is running holds more promise.

The Cynefin framework classifies decision contexts into four types: obvious, complicated, complex, chaotic.

Most problems that end up as candidates for AI would be complicated or complex, so let’s focus on those. If it’s the other two, you might already know what to do, or you might just need to start with something and see what happens.

In a complicated context, at least one right answer exists. In a complex context, however, right answers can’t be ferreted out. It’s like the difference between, say, a Ferrari and the Brazilian rainforest. Ferraris are complicated machines, but an expert mechanic can take one apart and reassemble it without changing a thing. The car is static, and the whole is the sum of its parts. The rainforest, on the other hand, is in constant flux — a species becomes extinct, weather patterns change, an agricultural project reroutes a water source — and the whole is far more than the sum of its parts. This is the realm of “unknown unknowns,” and it is the domain to which much of contemporary business has shifted. — A Leader’s Framework for Decision Making, Harvard Business Review

The difference between the two is how amenable to analysis the cause-effect relationships are. Imagine complicated and complex as the two ends of a spectrum of causal opacity. If you were to draw the causal links for a complex system you wouldn’t know when to stop, and it would have reinforcing and regulating loops. Causes in complex systems are hard to pinpoint a priori, only revealing themselves in hindsight. Complicated systems on the other hand are saner — you do this, and see that happen. Which is why the Ferrari in the quoted example above can be repaired, but the rainforest can only be tended to.

Here’s my thesis: If the context is complex, advanced AI is better utilized as, to borrow from control theory, a sensor. If it’s complicated, it could be an actuator.


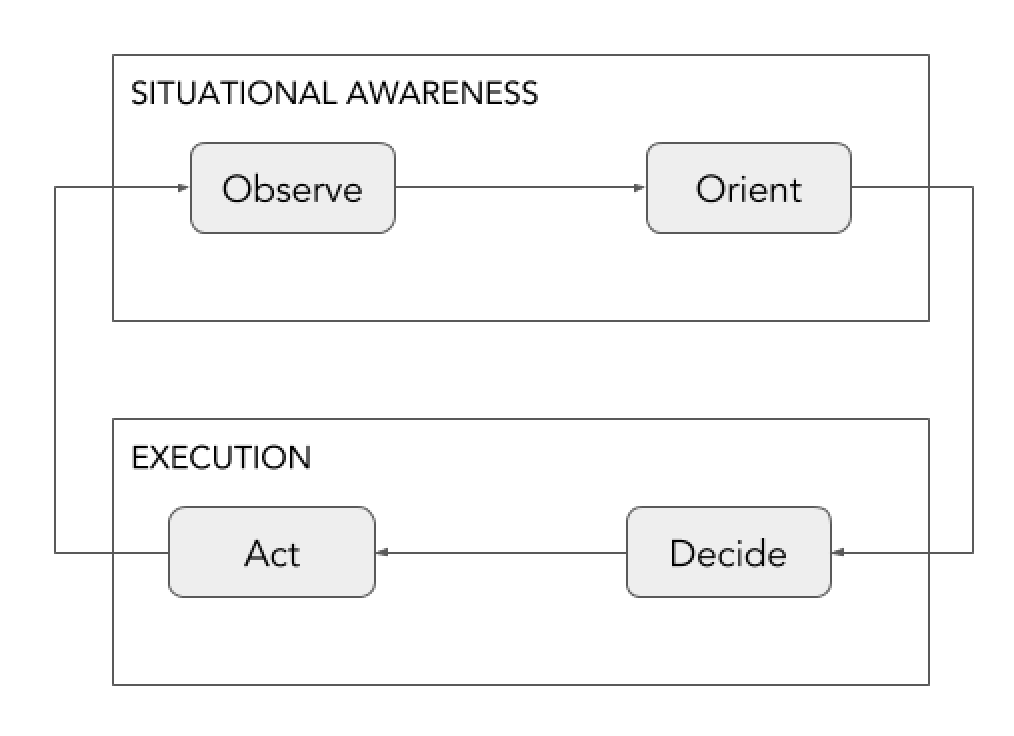

With the problem addressed being more complex, you are better off using the advanced techniques, if at all, to augment people by building more situational awareness than to automate execution. The decisions and actions would be more contextual and taken by humans.

On the other hand, for complicated systems, even if you aren’t yet aware of the workings of the system, there is hope that a machine could learn some approximation of it without achieving Artificial General Intelligence (AGI).

For complex systems, AGI, or at least an enormous amount of data to compensate, would be required. Cockpits come before autopilots, and UAVs come before robot generals.

And with that thesis in mind, here’s my corollary: one reason why attempts to use AI in business operations haven’t been too successful is because businesses, like all collections of interacting humans, are complex systems, and they have been trying to use AI to prescribe actions rather than to build situational awareness.

AI is more of a robot than an oracle.

The hype of AI makes people imbue it with magical, oracular capabilities of seeing the future in great detail. While the truth is, the future really is not that predictable, especially in complex contexts. AI is more of a robot than an oracle. It would do you good to not go about trying to solve complex problems like running a whole business with AI. Let the humans do what they are good at. Just augment them by improving their situational awareness first. Often, AI is premature optimization. And that, as Donald Knuth said, is the root of all evil.

A talk given at MIT called “How to Recognize AI Snake Oil” has been doing the rounds lately. It mentions how AI can’t predict social outcomes, and in most cases simpler methods like manual scoring are just as accurate and far more transparent. I would put this failure down to the same issue of social systems being complex.

Meanwhile, for dealing with complex situations, there’s a lot to learn from the history of militaries and governments. Those folks have been dealing with complexity for as long as they have existed. As a rule of thumb, keep clear of the hubris that makes governments think they can plan and run complex ecosystems of people, and be agile like the maneuver warfare doctrines recommend.

Systems analysis has recommendations of where to intervene, and it seems the most impact would be at the level of mindset, goals, and rules. But quantitative methods can still help. The Cynefin framework recommends probe-sense-respond in complex contexts. This translates to having ways to learn from the changing environment. A/B tests and randomized controlled trials come to mind. But do keep in mind the new methods of causal inference which can help you do those right, and even put your observational data to good use as valid proxies. Use simulation approaches, and at the very least, keep your situational awareness high with good sensor capabilities and business intelligence.
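As a small illustration of probe-sense-respond, here is a sketch of how the result of an A/B test might be read with a two-proportion z-test. The function name and the conversion counts are hypothetical, and the test assumes large, independent samples; treat it as one simple way to "sense", not as the recommended analysis for every experiment.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    Illustrative only: assumes large, independent samples so the normal
    approximation holds. Returns (z statistic, two-sided p-value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical probe: variant B of a page shown to 2000 users, A to another 2000.
z, p_value = two_proportion_z_test(conv_a=200, n_a=2000, conv_b=250, n_b=2000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

The response step is still a human call: a small p-value says the difference is unlikely to be noise, not that rolling out B is the right move in context.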

We now have a new angle to look at problems and match appropriate tools to them. And so we get to the final bit — businesses everywhere want to use analytical tools but where do they begin?

Where do we begin?

It is obvious that to identify entry points we need to zoom out a little — to see the big picture, if you will. Two mental models that I find are useful for this are the Theory of Constraints and Wardley Maps. Both zoom out, but the former looks at the mechanics of our value chain, and the latter puts it into the context of the world.

Theory of Constraints

…was first put down in fable form by Eliyahu Goldratt in the book The Goal. The DevOps community might be familiar with the same ideas from The Phoenix Project which is sort of an adaptation. For an example of how this might be used for business strategy take a look at this article.

The basic principle is that any system with a goal has one constraint. Any improvement elsewhere is an illusion. That radically simplifies our choice of what to work on. Given a process, we must work on the limiting constraint at that time. Once you make progress there the constraint will shift and so will your efforts.
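A toy sketch of that principle, assuming the simplest possible case of a strictly serial process where each stage has a fixed daily capacity. The stage names and numbers are made up for illustration.

```python
def throughput(capacities):
    """Throughput of a serial process is capped by its slowest stage."""
    return min(capacities.values())


def constraint(capacities):
    """Name of the stage that currently limits the whole process."""
    return min(capacities, key=capacities.get)


# Hypothetical analytics pipeline: units of work each stage can handle per day.
stages = {"collect": 120, "clean": 40, "model": 90, "deploy": 60}
print(constraint(stages), throughput(stages))

# Doubling a non-constraint stage leaves overall throughput unchanged.
faster_model = {**stages, "model": 180}
print(constraint(faster_model), throughput(faster_model))

# Doubling the constraint helps, and the constraint then moves elsewhere.
faster_clean = {**stages, "clean": 80}
print(constraint(faster_clean), throughput(faster_clean))
```

In this toy setup, speeding up modelling buys nothing while cleaning is the bottleneck, and once cleaning improves the next thing to look at is deployment.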

Okay, that is some guidance from a throughput point of view. The last one will provide some from a risk/reward point of view.

Wardley Maps

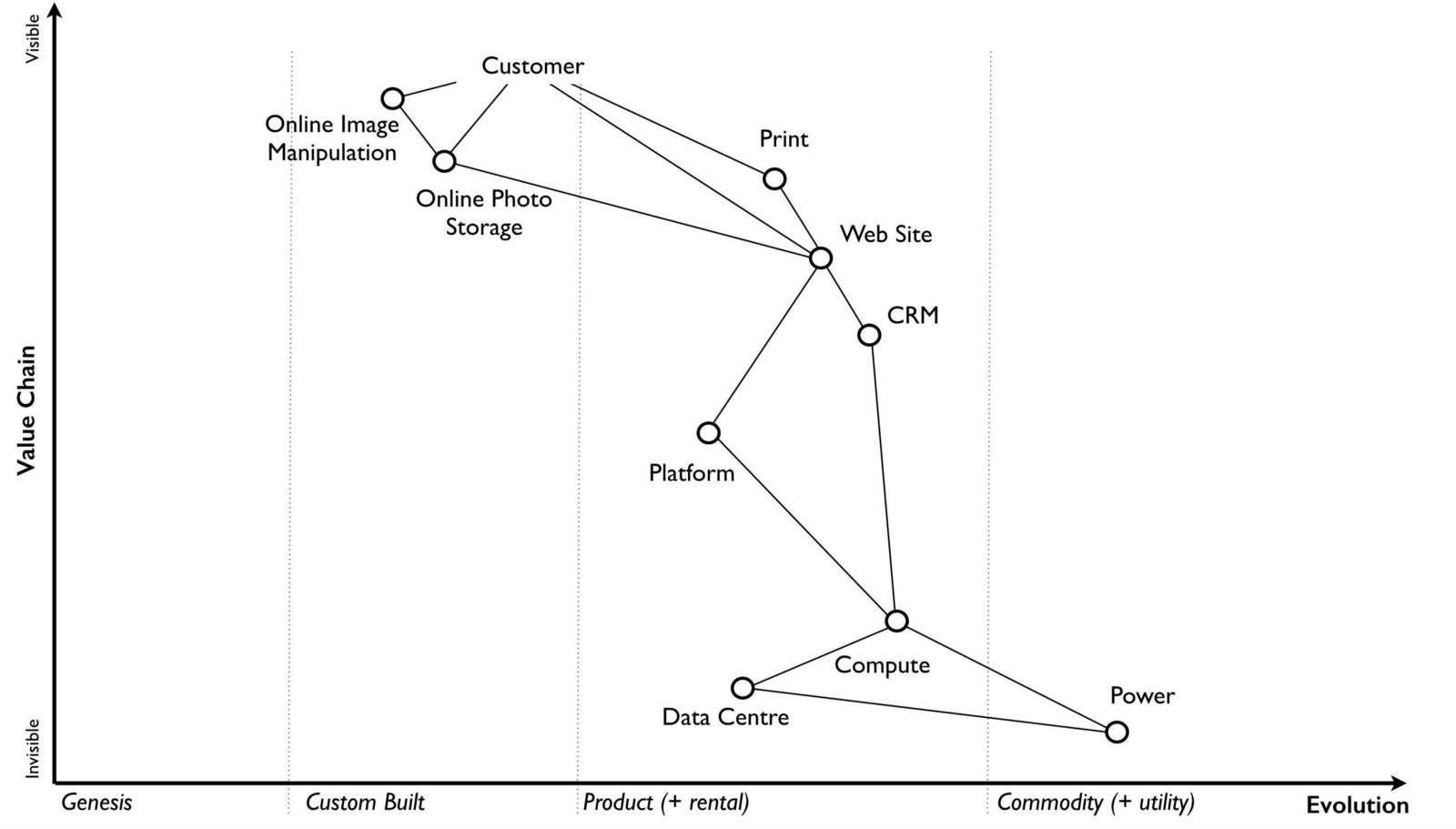

…is a tool for mapping your activities with ‘distance from user’ and ‘stage of activity maturity/evolution’ as the axes. Simon Wardley drew from the likes of Boyd and Sun Tzu to come up with the tool and the recommended gameplays. I won’t go into too much detail here (this post is already getting a little too long) but you can check out the online book here.

Anyway, the point is you create a map like the one below, and it serves as a communication and decision aid. You can think about what to do with components based on their stage of evolution and customer visibility. For example, would it make sense to outsource the commodities? What are the evolutionary forces at work in your value chain, and how would they change how you operate?

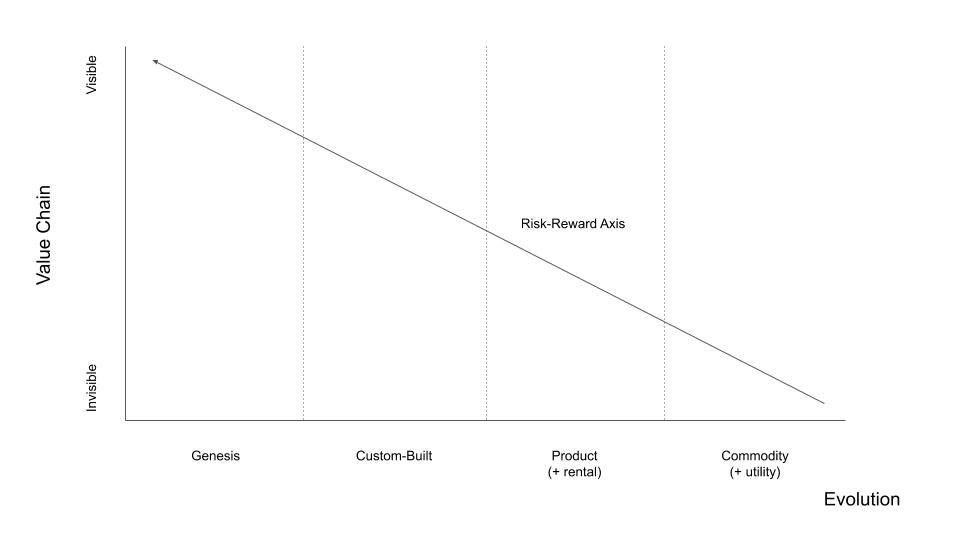

Now when you think about applying AI, the closer the activity is to the user and the more to the left the activity is, the more risk you are taking on, in exchange for chances of larger rewards.

So if you’d rather start with low-risk problems for advanced analytics, start with the lower-right corner and move diagonally up till you get a problem with enough reward while also being in a sufficiently non-complex context (usually the complexity would increase as you move too).
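That search can be sketched in a few lines. Everything here is hypothetical: the activities, their map coordinates, the reward estimates, and especially the `risk` score, which is just one crude way to turn "closer to the user and further left" into a number.

```python
from dataclasses import dataclass


@dataclass
class Activity:
    name: str
    visibility: float  # 0 = far from the user, 1 = right in front of the user
    evolution: float   # 0 = genesis (left of the map), 1 = commodity (right)
    reward: float      # rough payoff estimate, arbitrary units
    is_complex: bool   # True if the decision context is complex (Cynefin)


def risk(activity):
    """Crude risk proxy: higher near the user and towards the left of the map."""
    return activity.visibility + (1.0 - activity.evolution)


def first_candidate(activities, min_reward):
    """Walk from the lower-right corner upwards and return the first activity
    with enough reward that is not in a complex context, or None."""
    for activity in sorted(activities, key=risk):
        if activity.reward >= min_reward and not activity.is_complex:
            return activity
    return None


# Hypothetical map contents.
activities = [
    Activity("personalised pricing", 0.9, 0.2, 10.0, True),
    Activity("demand forecasting", 0.5, 0.5, 8.0, False),
    Activity("invoice matching", 0.3, 0.7, 5.0, False),
    Activity("server capacity planning", 0.1, 0.9, 2.0, False),
]

choice = first_candidate(activities, min_reward=4.0)
print(choice.name if choice else "nothing suitable yet")
```

The scoring is deliberately naive; the map itself, and the conversation it provokes, is doing the real work.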

And that, I think, is a good place to stop for now. We made a few connections between AI and strategy, and out of this exercise derived some guidance to think about AI and how to apply it.

Resources

- Boyd: The Fighter Pilot Who Changed the Art of War, Robert Coram

- https://taylorpearson.me/ooda-loop/

- https://hbr.org/2007/11/a-leaders-framework-for-decision-making

- https://www.cs.princeton.edu/~arvindn/talks/MIT-STS-AI-snakeoil.pdf

- http://donellameadows.org/archives/leverage-points-places-to-intervene-in-a-system/

- https://taylorpearson.me/business-strategy-framework/

- https://medium.com/wardleymaps

- https://medium.com/@erik_schon/the-art-of-strategy-26470e75e6ba