Speed and Quality

Escaping flatland

Recently I read Toyota Production System. There are later books that explore the system more fully, but it was great to read Taiichi Ohno himself revealing its essence. It is fascinating how far a few simple ideas were eventually developed.

Anyway, this got me thinking about speed and quality. When we talk about concepts, vague images often form in our minds. They are useful but dangerous, because they can limit what we are able to think. My guess is that we often picture speed and quality at two ends of a line: moving towards one means moving away from the other. Fast, bad work or slow, good work.

This is simplistic. After all, a more skilled worker can deliver something both faster and with higher quality. Creating a standard operating procedure can do the same. How do we fit those into this model?

Let's see if we can replace this mental image with something better. All models are wrong, of course; we are merely trying to make ours more useful by means of a richer mental image.

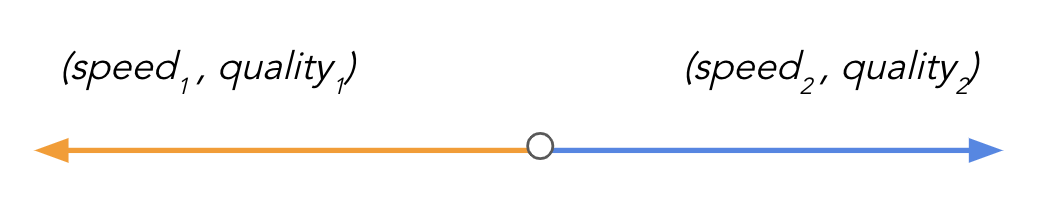

The first step is to put on our data hat and get uncomfortable with two quantities being represented on a single line. The following is more accurate:

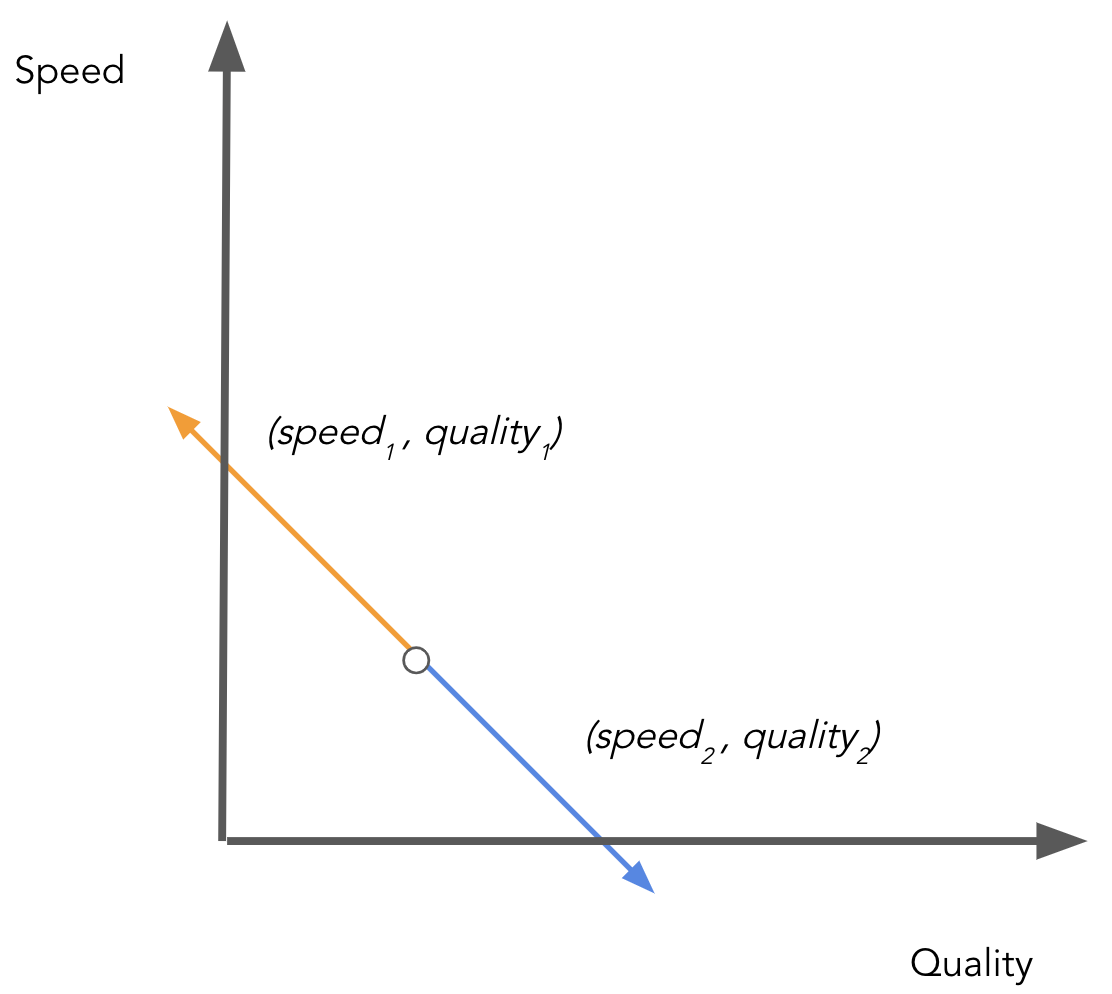

And once we are here, it isn’t much of a leap to put the line into a 2D plot.

This is interesting, and as we will see, empowering.

Along the line, everything is as we expect: more speed implies less quality and vice versa. But notice that we can now draw more lines. Lines lifted further up represent the sort of improvements we couldn't fit into our old model: more speed and more quality at the same time.

Already we have a richer representation of the trade-off, but we can also theorize about the forces which lift the lines. For individuals, we default to ‘skill’, but that doesn’t seem right for groups, so let’s settle on ‘ways of working’ for now.

The project management triangle says that you can choose only two out of good, fast, and cheap. Since we are already saying we want good and fast, this implies higher cost.

This seems to contradict our model, where we are trying to increase both with the same resources, but only if you interpret ‘cost’ narrowly. Cost is typically thought of in terms of more or better machines and workers. But consider broadening your interpretation of cost to include the psychological and social costs of changing behaviour. Ways of working are free in the traditional sense (unless you voluntarily spend on coaches, training, etc.), but costly in the psychosocial sense.

Deming should be familiar to data folks partly because he was a statistician himself, but mostly because he said something[1] that appears at the beginning of far too many presentations. Of quality problems, he said that 95% of them are deficiencies in the system. It's logical, then, to work on the system. Traditional spending focuses on the components of a system, while more impactful interventions[2] lean towards rules, goals, and culture, which is exactly what ways of working are made of.

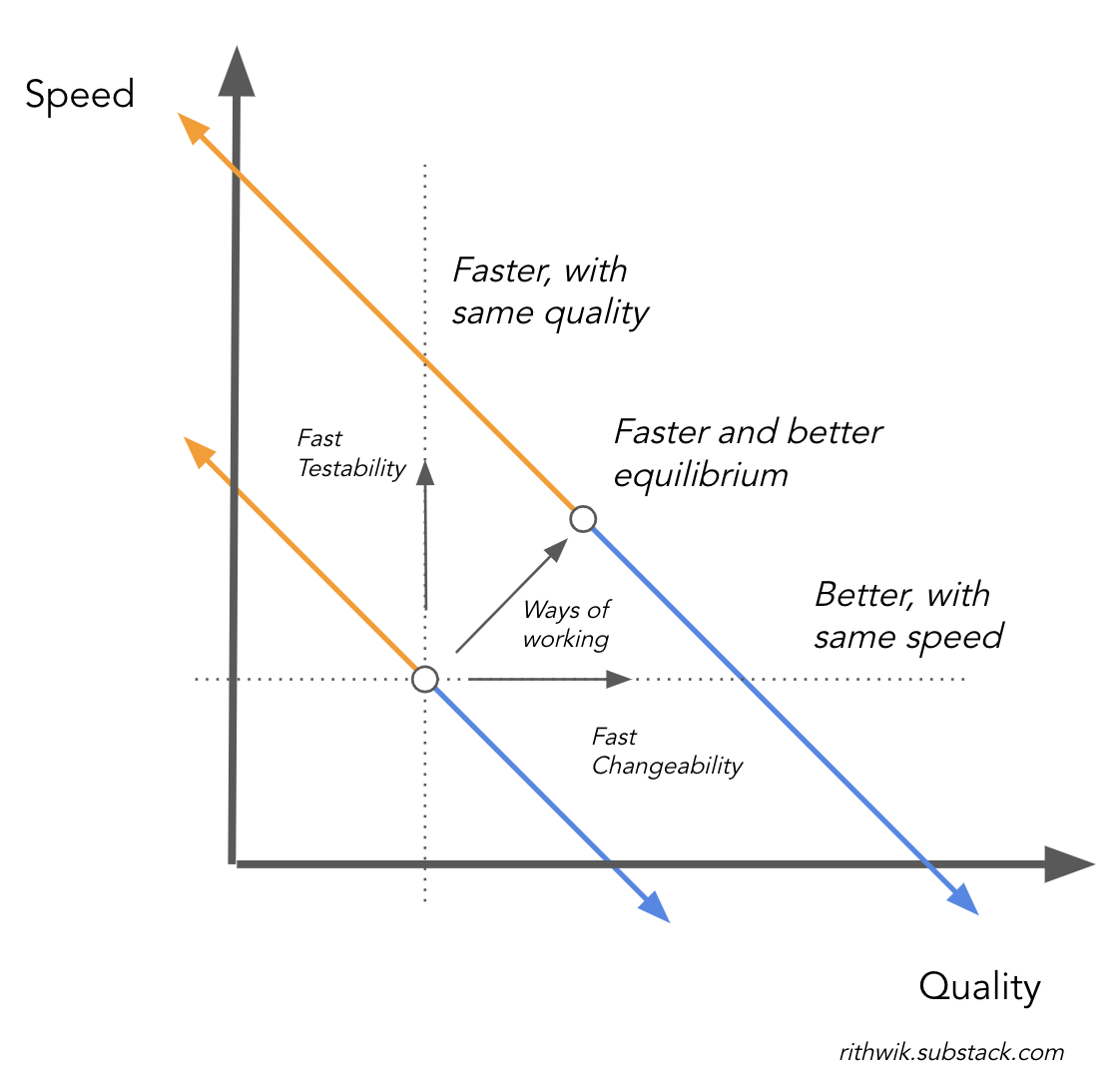

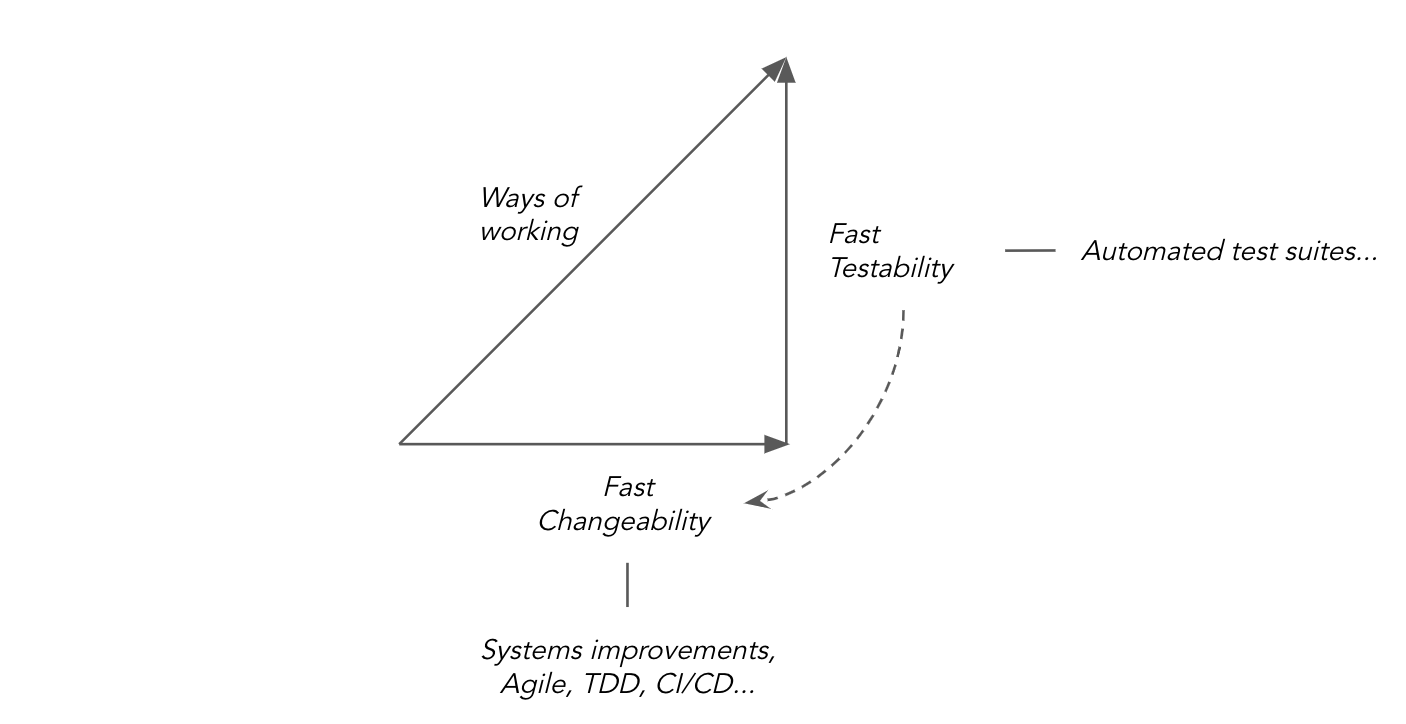

Let us zoom in and rearrange the forces depicted in our new model. Ways of working combine two types of intervention, each targeting one aspect of quality.

Without getting too philosophical about what quality is, let's say there are two aspects to it: quality of design and quality of build. In other words, building the right thing, and building it right. To serve both, you need to make your work quicker to change and easier to test.

The testing aspect of quality assurance is so front and center in our minds that we notice only the quality-of-build angle and ignore the other one: change-readiness, which assures quality of design. In this incomplete view, it is true that quality practices slow us down. But once you see both angles, the apparent slowness turns out to be an illusion. Imagine two cars, one slow and one fast, on a road with many bends. The fast one keeps hitting the rails and loses time re-orienting, while the slow one steers smoothly ahead and reaches the end first. As the special operations community says, slow is smooth, smooth is fast.

Automation speeds up tests, but how do we speed up changes? The answer seems to be pushing quality upstream into earlier processes and reducing batch sizes. That lies at the core of frameworks like lean, agile, the Toyota Production System, test-driven development, continuous integration, and so on. Notice that these aren't what we typically think of when we talk about quality. As I said, quality of build gets disproportionate attention. For quality of design, that is, for faster changes, we must zoom out from a dedicated QA group to see the whole system.

When it comes to data science, changeability is even more critical. Every step in the process is provisional. Change is demanded by stakeholders, new analysis ideas, model results, and the data itself. And yet data science codebases in the wild are often the hardest to change.

Here are a few starter ideas to remedy this:

Write functions. It makes code testable and changeable by packaging functionality into reusable pieces.

Testability is useless without tests, and having them lets you change things faster and with more confidence.

Move code out of fragile notebooks[3] as soon as it makes sense to do so.

Sketch first, colour later. Delivering low-fidelity versions and iterating makes things more changeable, because it delays more permanent decisions.
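As a rough illustration of the first two ideas, here is a minimal Python sketch. The function `clean_column_names` and its test are hypothetical, not taken from any particular codebase; they show a small piece of typical notebook logic packaged into a function, plus a test that pins down its behaviour so the function can later be changed with confidence.

```python
import re


def clean_column_names(columns):
    """Normalise raw column headers to lowercase snake_case.

    Hypothetical example of notebook logic packaged into a reusable,
    testable function.
    """
    cleaned = []
    for name in columns:
        name = name.strip().lower()
        # Replace each run of non-alphanumeric characters with one underscore.
        name = re.sub(r"[^0-9a-z]+", "_", name)
        # Drop underscores left at either end, e.g. from a trailing ")".
        cleaned.append(name.strip("_"))
    return cleaned


def test_clean_column_names():
    raw = [" Order Date", "Customer-ID", "Total (USD)"]
    assert clean_column_names(raw) == ["order_date", "customer_id", "total_usd"]
    # Cleaning already-clean names should change nothing.
    assert clean_column_names(clean_column_names(raw)) == clean_column_names(raw)


if __name__ == "__main__":
    # Runs without a test framework; pytest would also discover the test.
    test_clean_column_names()
    print("ok")
```

Once a function like this lives in a module rather than a notebook cell, a test runner such as pytest (which collects functions named `test_*`) or a continuous integration job can run the test automatically on every change.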

[1] “In God we trust, all others bring data.” Surprised you had to look, though.

[2] Donella Meadows, “Leverage Points: Places to Intervene in a System”: http://donellameadows.org/archives/leverage-points-places-to-intervene-in-a-system/

[3] https://docs.google.com/presentation/d/1n2RlMdmv1p25Xy5thJUhkKGvjtV-dkAIsUXP-AL4ffI/edit